Flaky Tests


The flaky test dashboard shows unreliable tests in your projects. This page explains how flaky test detection works and how to interpret the dashboard.

Overview

The Flaky tests tab helps you find flaky tests in your suite. Flaky tests are tests that fail seemingly at random, without any obvious cause. Identifying them helps you improve the reliability of your pipeline.

What is the definition of flaky tests?

A test is considered flaky when either of these conditions occurs:

- The test produces different results for the same Git commit
- The test was passing, but begins to behave unreliably once merged into a branch
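The first condition can be pictured with a short sketch: group test results by commit SHA and flag any test that both passed and failed on the same commit. This is a simplified, hypothetical illustration, not Semaphore's detection algorithm; the `TestRun` record and `find_flaky_tests` function are made up for the example.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class TestRun:
    """One hypothetical test result taken from a test report."""
    test_name: str
    commit_sha: str
    passed: bool


def find_flaky_tests(runs: list[TestRun]) -> set[str]:
    """Return names of tests with mixed results on at least one commit."""
    # Collect every outcome observed for each (test, commit) pair.
    outcomes: dict[tuple[str, str], set[bool]] = defaultdict(set)
    for run in runs:
        outcomes[(run.test_name, run.commit_sha)].add(run.passed)
    # A pair that saw both True and False produced different results
    # for the same commit.
    return {name for (name, _sha), seen in outcomes.items() if len(seen) == 2}


runs = [
    TestRun("TestAPI", "a1b2c3", True),
    TestRun("TestAPI", "a1b2c3", False),
    TestRun("TestLogin", "a1b2c3", True),
    TestRun("TestLogin", "d4e5f6", False),
]
print(find_flaky_tests(runs))  # {'TestAPI'}
```

`TestLogin` is not flagged by this check: it failed on a different commit than the one it passed on, which this sketch treats as a possible real regression rather than a flake.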

How to set up flaky test detection

Flaky test detection is enabled automatically once test reports are configured. No additional setup is needed.

It may take a few pipeline runs before flaky tests begin to appear in the Flaky tests tab.

How to view flaky tests

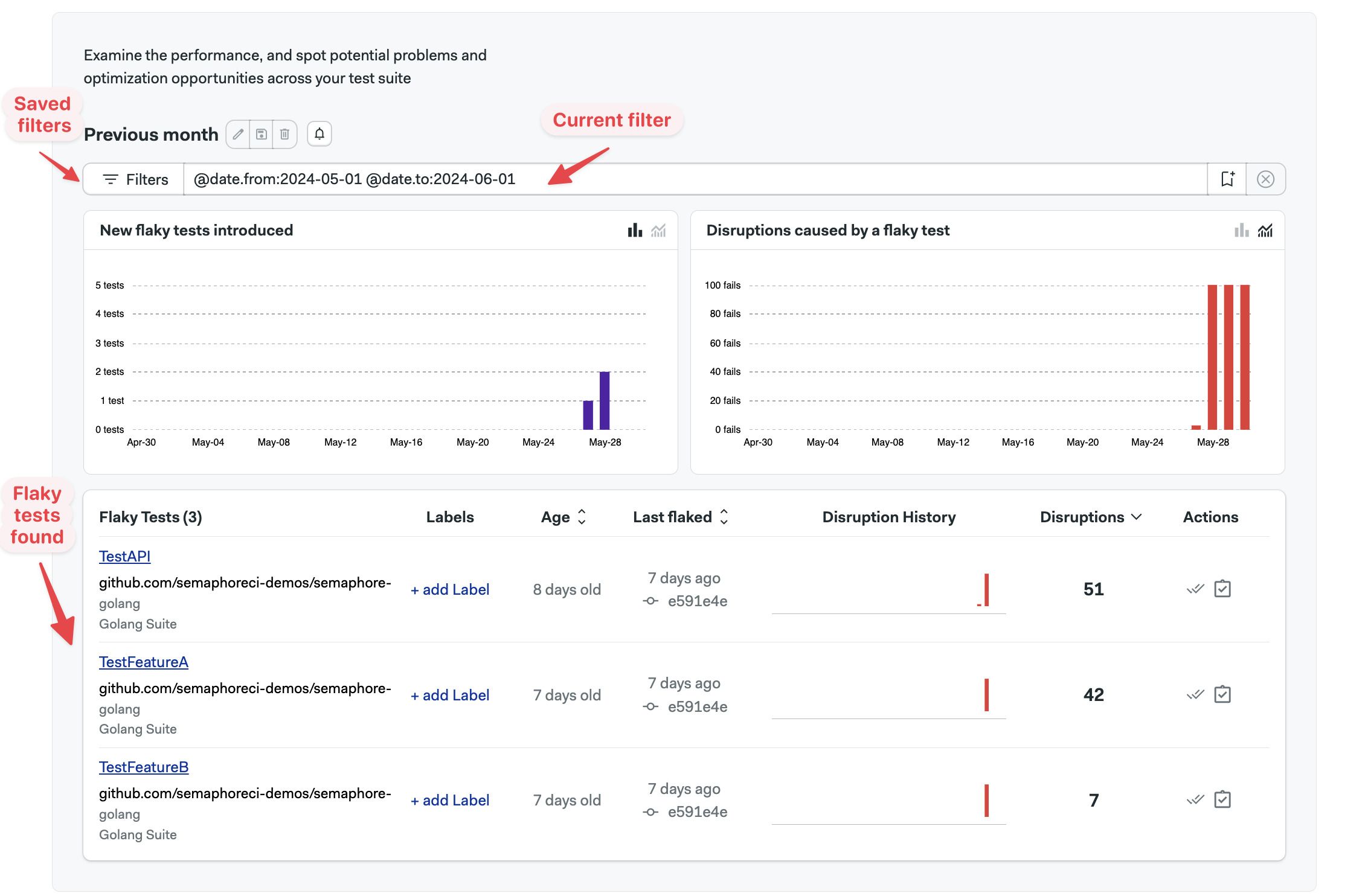

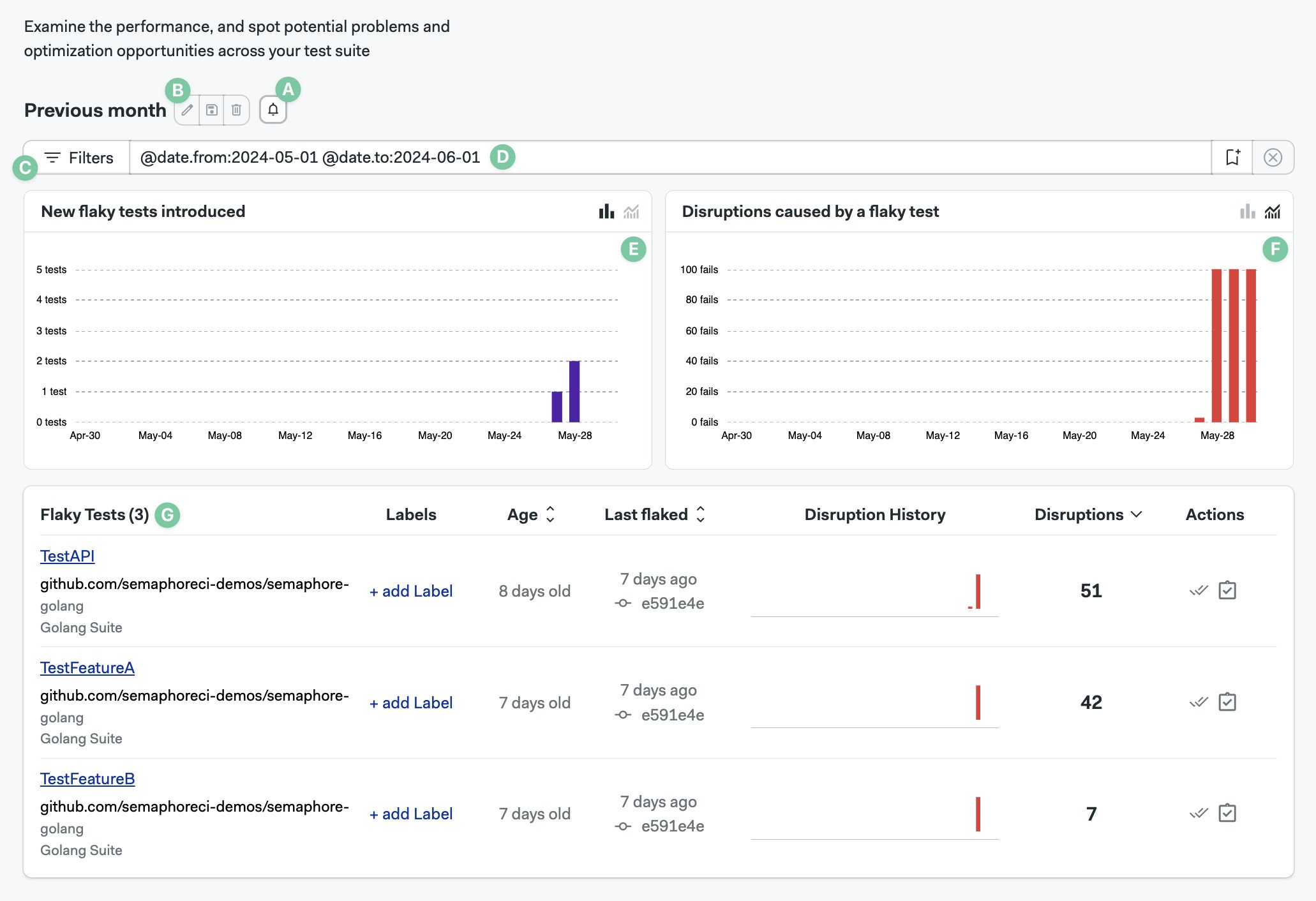

Open your project on Semaphore and go to the Flaky tests tab.

The dashboard shows:

- A. Create notification button (bell icon)

- B. Edit filters buttons

- C. Saved filters

- D. The current filter

- E. New flaky tests found in the filtered view

- F. Flaky test disruptions by date in the filtered view

- G. The list of flaky tests

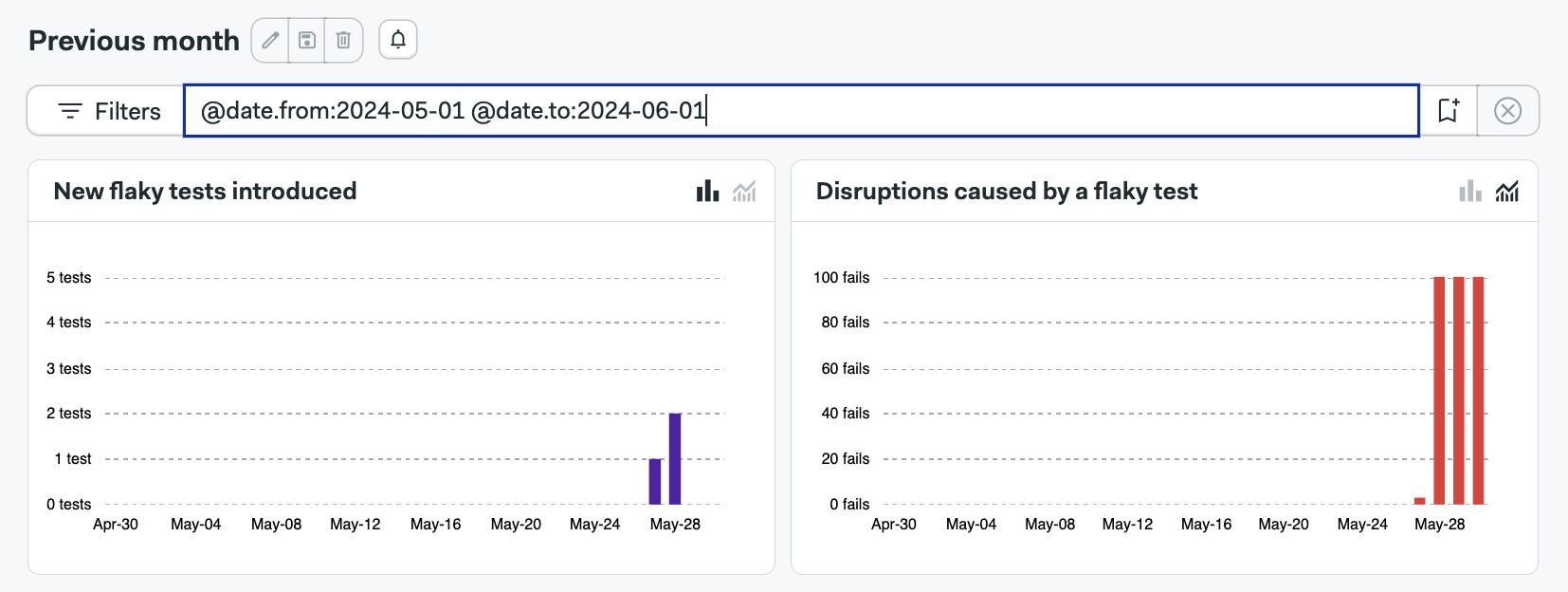

Charts

The New Flaky Tests chart shows the new flaky tests detected in the selected period.

The Disruptions Caused by a Flaky Test chart shows how many times flaky tests failed and disrupted your test suite in the selected period.

You can switch between daily occurrences and cumulative views on both charts.
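The cumulative view is a running total of the daily counts. A minimal sketch of that conversion, assuming the chart data is a list of per-day counts (the `cumulative` function is hypothetical):

```python
from itertools import accumulate


def cumulative(daily_counts: list[int]) -> list[int]:
    """Convert per-day counts into running totals, as in a cumulative chart."""
    return list(accumulate(daily_counts))


daily_disruptions = [3, 0, 5, 2]
print(cumulative(daily_disruptions))  # [3, 3, 8, 10]
```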

Filtering the view

You can define, edit, and save filters to view the flaky test data in different ways.

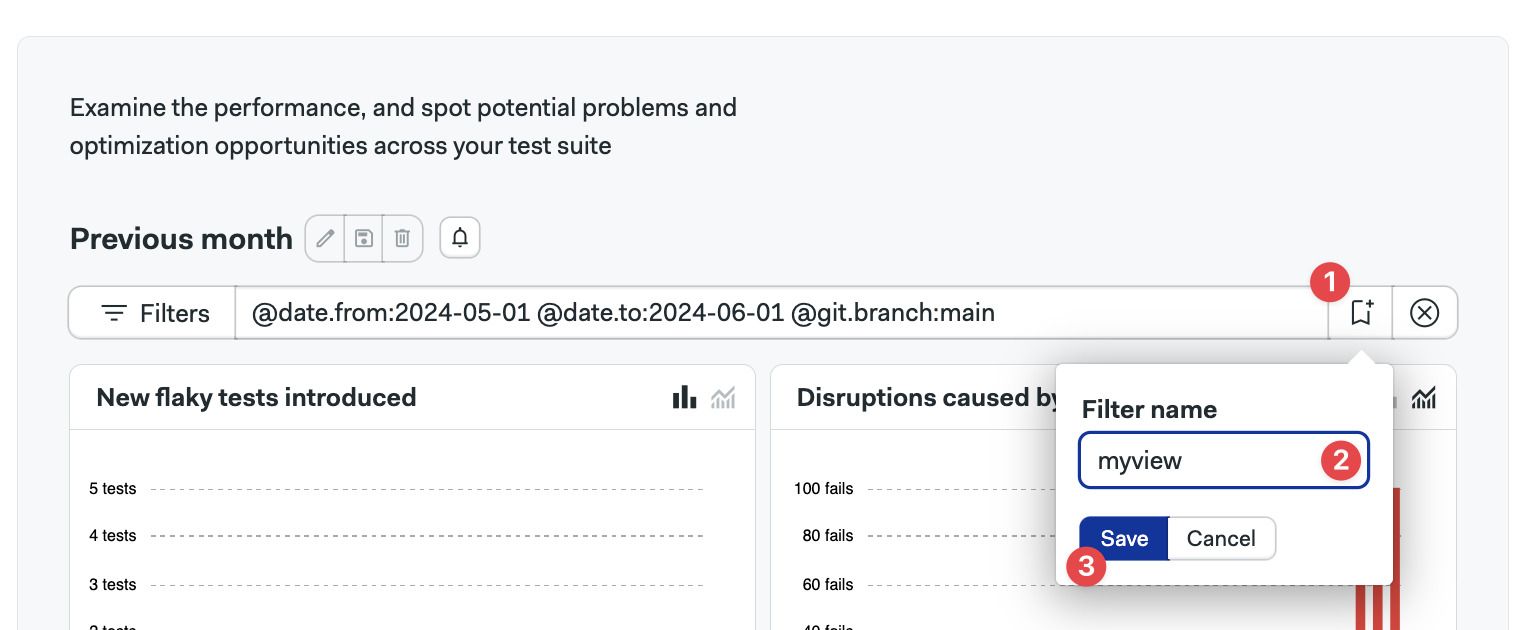

How to create a filter

To create a filter, type the filter key in the filter box and press Enter/Return. The view will reload with the new data.

Once you have a filter you like, you can save it by:

- Pressing the Create new filter button

- Typing your filter name

- Pressing Save

Filter keys

You can combine multiple filter keys to create a filter that suits your needs. The available filter keys are:

| Filter key | Description | Type |
|---|---|---|
| @git.branch | Git branch | text |
| @git.commit_sha | Git commit SHA | text |
| @test.name | Test name | text |
| @test.group | Group that the test is assigned to | text |
| @test.file | Test file | text |
| @test.class.name | Name of the test class | text |
| @test.suite | Name of the test suite | text |
| @test.runner | Name of the test runner | text |
| @metric.age | Days since the first flake | numeric |
| @metric.pass_rate | Pass rate of the test (%) | numeric |
| @metric.disruptions | Number of disruptions caused by the test | numeric |
| @label | Label assigned to the test | text |
| @is.resolved | Resolved or unresolved tests | boolean |
| @is.scheduled | Scheduled (linked to a ticket) or unscheduled tests | boolean |
| @date.from | Start of the date range | text |
| @date.to | End of the date range | text |

Text filter keys

Text filters allow you to specify values using alphanumeric characters. Wildcards (* or %) can be used to match patterns.

Examples include:

- Test name: `@test.name:"TestAPI"`
- Git branch: `@git.branch:main`
- Test runner: `@test.runner:"golang"`
- Labels: `@label:"new"`
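To make the wildcard behavior concrete, here is a hypothetical sketch that treats `*` and `%` as "any sequence of characters" and requires the pattern to match the whole value. Whether Semaphore anchors patterns this way or matches case-sensitively is an assumption here, and the function names are made up.

```python
import re


def wildcard_to_regex(pattern: str) -> re.Pattern:
    """Compile a filter value where * or % matches any run of characters."""
    # Split on the wildcards and escape each literal piece so characters
    # like "." stay literal, then join the pieces with ".*".
    parts = re.split(r"[*%]", pattern)
    return re.compile(".*".join(re.escape(part) for part in parts))


def matches(pattern: str, value: str) -> bool:
    """Return True if the whole value matches the wildcard pattern."""
    return wildcard_to_regex(pattern).fullmatch(value) is not None


print(matches("Test*", "TestAPI"))                    # True
print(matches("%_test.exs", "calculator_test.exs"))  # True
print(matches("release-*", "main"))                  # False
```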

The @date.from and @date.to keys are special cases of text keys. These keys accept:

- Values in ISO date format: `YYYY-MM-DD`
- Values relative to now: `now-30d` or `now-10d`
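A minimal sketch of how such a value could be resolved to a calendar date, assuming only the two forms shown above: `now-<N>d` for N days ago and ISO `YYYY-MM-DD`. The function name and the explicit `today` parameter are hypothetical; `today` is passed in so the example is deterministic.

```python
import re
from datetime import date, timedelta


def parse_date_value(value: str, today: date) -> date:
    """Resolve a @date.from/@date.to value to a calendar date."""
    # Relative form: "now-<N>d" means N days before today.
    relative = re.fullmatch(r"now-(\d+)d", value)
    if relative:
        return today - timedelta(days=int(relative.group(1)))
    # Absolute form: ISO 8601 calendar date; raises ValueError otherwise.
    return date.fromisoformat(value)


today = date(2025, 3, 22)
print(parse_date_value("now-30d", today))     # 2025-02-20
print(parse_date_value("2025-01-15", today))  # 2025-01-15
```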

Numeric filter keys

Numeric keys allow you to filter based on numerical values.

Numeric keys accept the following operators:

- Greater than (`>`)
- Less than (`<`)
- Equal to (`=`)
- Not equal to (`!=`)
- Greater than or equal to (`>=`)
- Less than or equal to (`<=`)

Examples include:

- Test age: `@metric.age:<10` shows tests less than 10 days old
- Disruptions: `@metric.disruptions:>30` shows tests with more than 30 disruptions
- Pass rate: `@metric.pass_rate:>20` shows tests with a pass rate greater than 20%
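The sketch below shows one way a numeric filter such as `@metric.age:<10` could be split into a key, an operator, and a threshold, then applied to a list of tests. It is a hypothetical illustration: the test records, the `metric.*` field names inside them, and both functions are assumptions, not Semaphore's implementation.

```python
import operator
import re
from typing import Callable

# Maps each documented operator to the comparison it performs.
OPERATORS: dict[str, Callable[[float, float], bool]] = {
    ">": operator.gt,
    "<": operator.lt,
    "=": operator.eq,
    "!=": operator.ne,
    ">=": operator.ge,
    "<=": operator.le,
}

# Two-character operators come first in the alternation so ">=" is not read as ">".
NUMERIC_FILTER = re.compile(
    r"@(?P<key>[\w.]+):(?P<op>>=|<=|!=|>|<|=)(?P<value>\d+(?:\.\d+)?)"
)


def parse_numeric_filter(text: str) -> tuple[str, Callable[[float, float], bool], float]:
    """Split a filter such as '@metric.age:<10' into key, comparison, and threshold."""
    match = NUMERIC_FILTER.fullmatch(text)
    if match is None:
        raise ValueError(f"not a numeric filter: {text!r}")
    return match["key"], OPERATORS[match["op"]], float(match["value"])


def apply_filter(tests: list[dict], text: str) -> list[dict]:
    """Keep only the tests whose metric satisfies the filter."""
    key, compare, threshold = parse_numeric_filter(text)
    return [test for test in tests if compare(test[key], threshold)]


tests = [
    {"name": "TestAPI", "metric.age": 4, "metric.disruptions": 42, "metric.pass_rate": 85.0},
    {"name": "TestLogin", "metric.age": 15, "metric.disruptions": 3, "metric.pass_rate": 10.0},
]
print([t["name"] for t in apply_filter(tests, "@metric.age:<10")])  # ['TestAPI']
```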

Boolean filter keys

Boolean keys accept true or false.

Examples of boolean filter keys are:

- Tests not marked as resolved: `@is.resolved:false`
- Tests without linked tickets: `@is.scheduled:false`

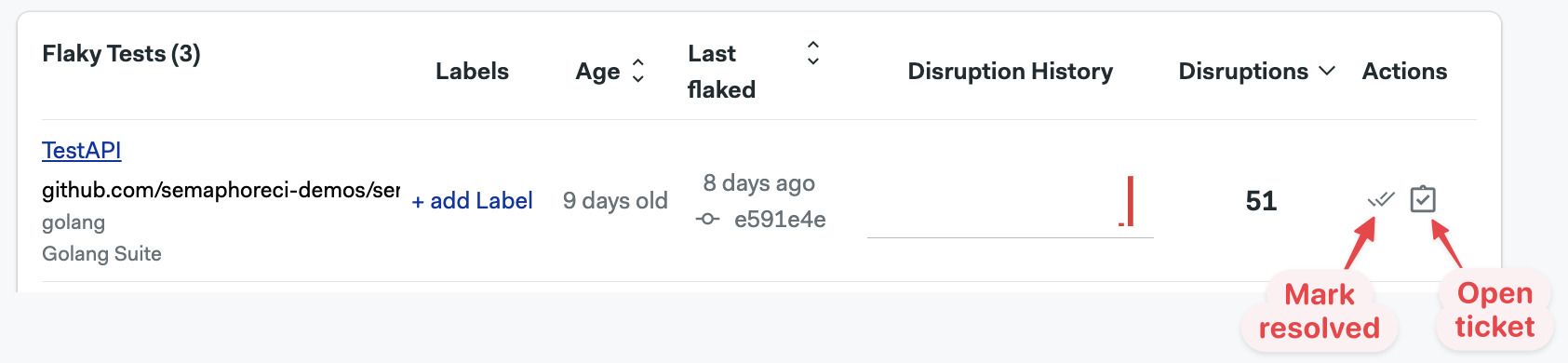

Taking actions on tests

The lower part of the flaky test dashboard lists the detected tests.

You can take the following actions on them:

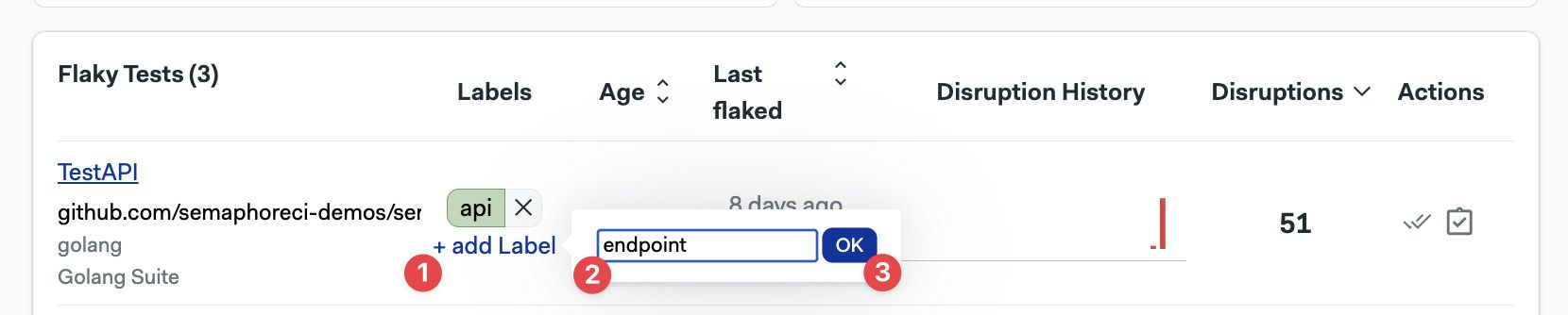

Adding labels

To add labels to a flaky test:

- Press the +Add label button

- Type the label

- Press OK

To add more labels, repeat the same steps. You can have up to three labels per test.

You can then use the @label key to filter tests by label. In addition, clicking a label shows a filtered view with all the tests that share that label.

Marking tests as resolved

Tests marked as resolved are hidden from the default view. You can show them again with the `@is.resolved:true` filter key, or unmark a test to return it to the default view.

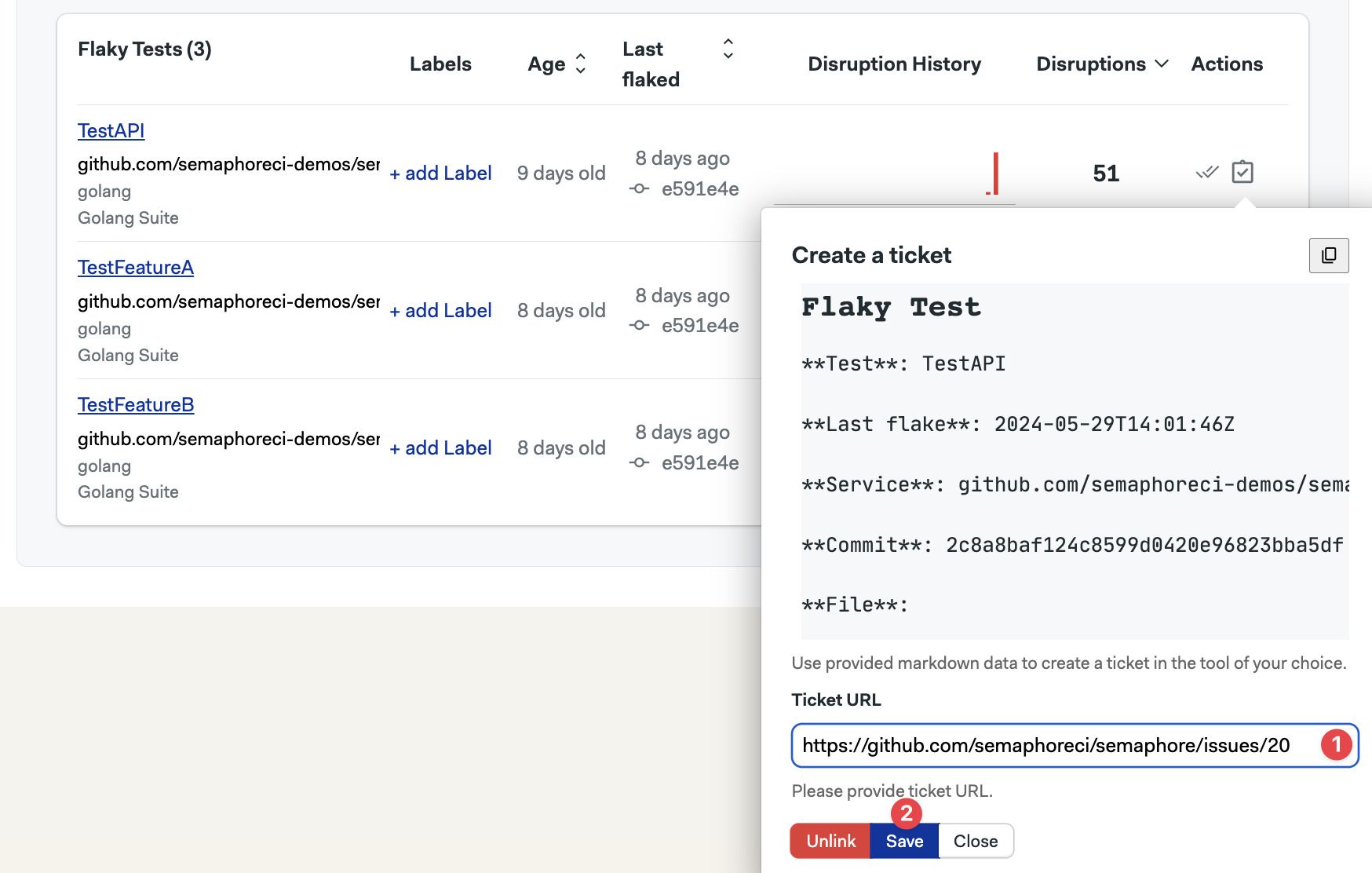

Link test to a ticket

Pressing the Open ticket action button lets you link the test to an issue. Tests with linked tickets can be filtered with the `@is.scheduled:true` filter key.

To use this feature, create a ticket in your tool of choice (for example, GitHub), then:

- Copy and paste the ticket URL

- Press Save

The ticket window shows test details in Markdown that you can use when creating your ticket.

You can also unlink the test from the ticket in this window.

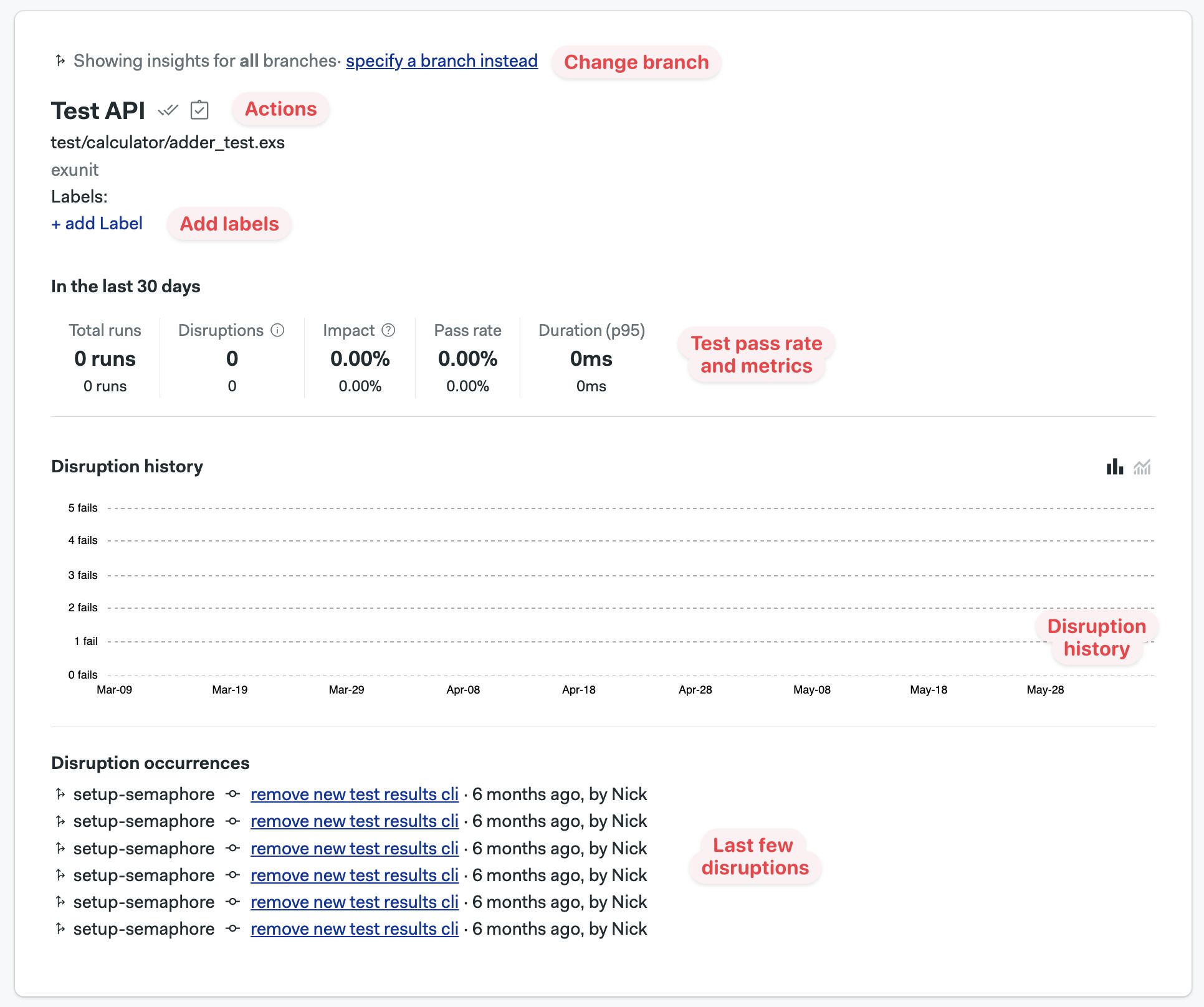

Detailed view

Clicking on a flaky test opens the detailed view for that test.

You can take the same actions in this view: add labels, mark the test as resolved, and link it to a ticket.

You'll also find aggregated data detailing the impact of the selected flaky test, such as its P95 runtime, total number of runs, and pass rate. In addition, you can see the most recent flaky disruptions for the test.
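As a rough illustration of these aggregated metrics, the sketch below computes a pass rate and a 95th-percentile runtime from hypothetical run records. The percentile method Semaphore uses isn't stated on this page; the nearest-rank method here is an assumption, and the function names are made up.

```python
def pass_rate(results: list[bool]) -> float:
    """Percentage of runs that passed."""
    if not results:
        raise ValueError("need at least one run")
    return 100.0 * sum(results) / len(results)


def p95(durations_ms: list[float]) -> float:
    """95th-percentile runtime using the nearest-rank method."""
    if not durations_ms:
        raise ValueError("need at least one run")
    ordered = sorted(durations_ms)
    # Nearest rank is ceil(0.95 * n); integer arithmetic avoids float rounding.
    rank = (95 * len(ordered) + 99) // 100
    return ordered[rank - 1]


results = [True] * 18 + [False] * 2
durations = [float(ms) for ms in range(100, 2100, 100)]  # 20 runs: 100..2000 ms
print(f"runs={len(results)} pass_rate={pass_rate(results):.1f}% p95={p95(durations)} ms")
```

With 20 runs, the nearest rank is the 19th-smallest duration, so a single slow outlier run does not set the P95 on its own.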

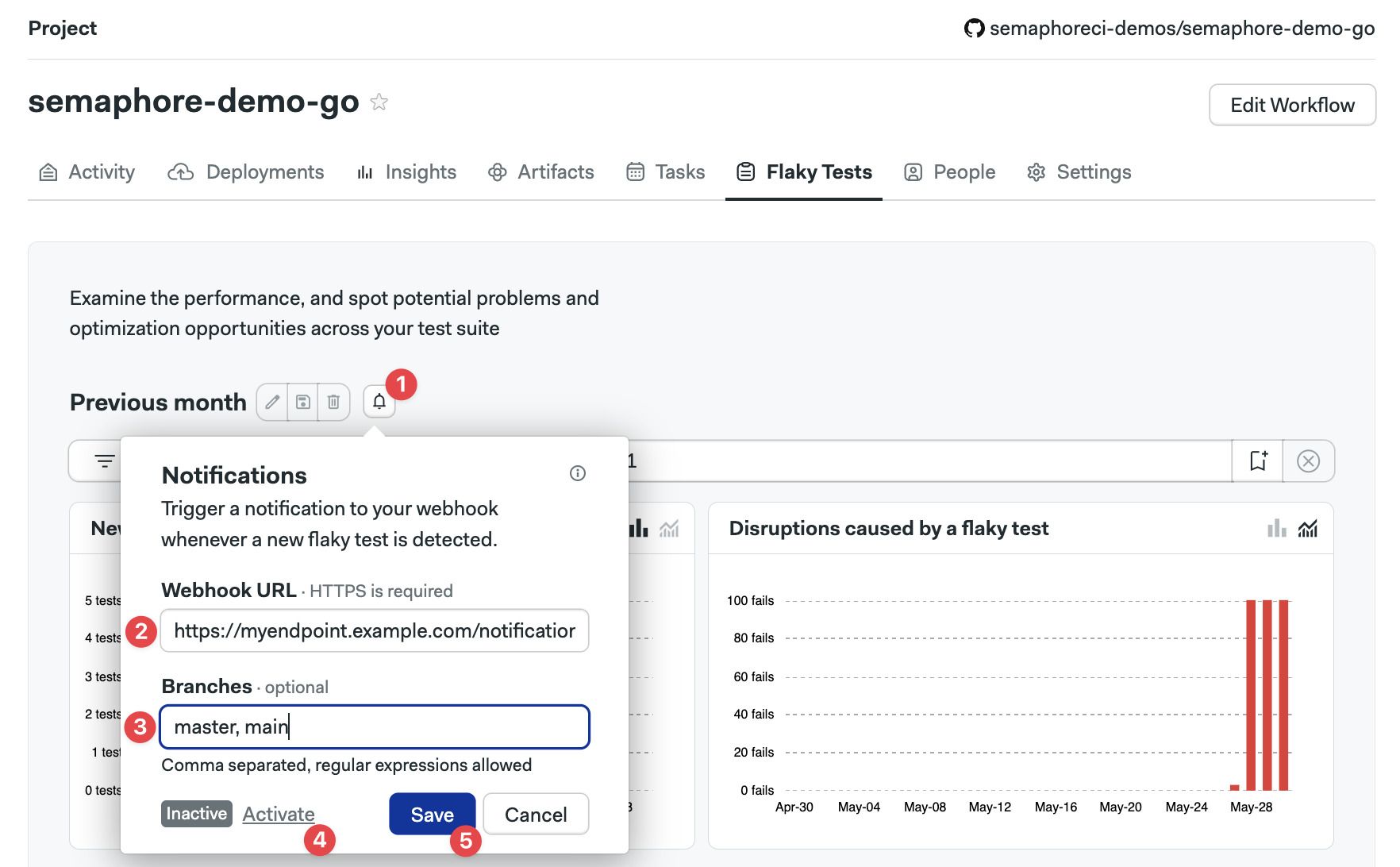

Notifications

You can send webhook-based notifications when a new flaky test is detected.

Notifications can be enabled from the main Flaky Test dashboard:

- Press the bell icon

- Enter the webhook URL (only HTTPS URLs are allowed)

- Optionally, type the branch names for which you want to receive notifications. Leave blank to enable all branches

- Press Activate

- Press Save

You can use basic regular expressions in the branches field. For example, you can use patterns like `release-*` or `.*`. To specify multiple branches, separate them with commas.

The branches field has a limit of 100 characters.
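The branch filter can be approximated as follows: split the field on commas, treat each entry as a regular expression, and notify if any entry matches. This is a hypothetical sketch. In particular, matching each pattern from the start of the branch name (Python's `re.match`) is an assumption chosen so that both documented examples behave as expected.

```python
import re

MAX_BRANCHES_FIELD_LENGTH = 100  # limit stated on this page


def parse_branch_patterns(field: str) -> list[re.Pattern]:
    """Split the comma-separated branches field into compiled patterns."""
    if len(field) > MAX_BRANCHES_FIELD_LENGTH:
        raise ValueError("the branches field is limited to 100 characters")
    return [re.compile(p.strip()) for p in field.split(",") if p.strip()]


def should_notify(branch: str, field: str) -> bool:
    """Decide whether a flaky test on `branch` should trigger a notification."""
    patterns = parse_branch_patterns(field)
    if not patterns:  # a blank field enables all branches
        return True
    # Assumption: each pattern is matched from the start of the branch name.
    return any(p.match(branch) for p in patterns)


print(should_notify("release-1.2", "main, release-*"))    # True
print(should_notify("feature/login", "main, release-*"))  # False
```

Because matching is anchored only at the start, a pattern like `main` would also match `maintenance`; appending `$` (for example, `main$`) would restrict it to the exact name under this sketch's assumption.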

Notification payload

When notifications are enabled, Semaphore sends an HTTPS POST request with `Content-Type: application/json` and a body that follows the schema shown below. The endpoint should return a 2XX response status code.

If the endpoint doesn't respond with a 2XX code, Semaphore attempts to resend the request four additional times using an exponential backoff strategy.
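The retry behavior described above (one initial attempt plus four retries with exponential backoff) can be modeled with a short sketch. The base delay and the doubling factor are assumptions, because the actual delays are not documented here. `send` is a hypothetical callable that performs one request and returns the HTTP status code.

```python
import time
from typing import Callable

MAX_RETRIES = 4  # "four additional times", per this page


def deliver(send: Callable[[], int], base_delay: float = 1.0,
            sleep: Callable[[float], None] = time.sleep) -> bool:
    """Try to deliver a notification, retrying non-2XX responses with backoff."""
    for attempt in range(MAX_RETRIES + 1):
        status = send()
        if 200 <= status < 300:
            return True
        if attempt < MAX_RETRIES:
            # Exponential backoff: 1 s, 2 s, 4 s, 8 s with the default base delay.
            sleep(base_delay * 2 ** attempt)
    return False
```

Passing `sleep` as a parameter lets a test record the delays instead of actually waiting. From the receiver's side, the practical takeaway is to return a 2XX code promptly, and to tolerate receiving the same notification more than once if an earlier response was lost.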

Each notification carries a JSON payload like the example shown below:

```json
{
  "id": "a01e9b47-7e3c-4165-9007-8a3c1652b31a",
  "project_id": "4627d711-4aa2-xe1e-bc5c-e0f4491b8735",
  "test_id": "3177e680-46ac-4c39-b9fa-02c4ba71b644",
  "branch_name": "main",
  "test_name": "Test 1",
  "test_group": "Elixir.Calculator.Test",
  "test_file": "calculator_test.exs",
  "test_suite": "suite1",
  "created_at": "2025-03-22T18:24:34.479219+01:00",
  "updated_at": "2025-03-22T18:24:34.479219+01:00"
}
```
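A minimal receiver for this payload can be written with Python's standard library. This is a sketch, not an official client: the port, the `handle_notification` and `serve` names, and what the handler does with the payload are all hypothetical. It answers `204 No Content` (a 2XX code) for valid JSON objects and `400` otherwise.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def handle_notification(body: bytes) -> int:
    """Process one notification body and return the HTTP status to send."""
    try:
        payload = json.loads(body)
    except ValueError:  # covers malformed JSON and undecodable bytes
        return 400
    if not isinstance(payload, dict):
        return 400
    # Replace this with real work, such as posting to chat or opening a ticket.
    print(f"New flaky test {payload.get('test_name')!r} on branch {payload.get('branch_name')!r}")
    return 204


class NotificationHandler(BaseHTTPRequestHandler):
    def do_POST(self) -> None:
        length = int(self.headers.get("Content-Length", 0))
        status = handle_notification(self.rfile.read(length))
        self.send_response(status)
        self.end_headers()


def serve(port: int = 8080) -> None:
    """Listen for notifications on the given port (plain HTTP)."""
    HTTPServer(("", port), NotificationHandler).serve_forever()
```

Calling `serve()` starts a plain-HTTP listener. Since only HTTPS webhook URLs are accepted, a real deployment would typically place it behind a TLS-terminating proxy.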

Notification schema

The complete schema for the notification is shown below:

```yaml
type: object
properties:
  id:
    type: string
    format: uuid
    example: "a01e9b47-7e3c-4165-9007-8a3c1652b31a"
  project_id:
    type: string
    format: uuid
    example: "4627d711-4aa2-xe1e-bc5c-e0f4491b8735"
  test_id:
    type: string
    format: uuid
    example: "3177e680-46ac-4c39-b9fa-02c4ba71b644"
  branch_name:
    type: string
    example: "main"
  test_name:
    type: string
    example: "Test 1"
  test_group:
    type: string
    example: "Elixir.Calculator.Test"
  test_file:
    type: string
    example: "calculator_test.exs"
  test_suite:
    type: string
    example: "suite1"
  created_at:
    type: string
    format: date-time
    example: "2025-03-22T18:24:34.479219+01:00"
  updated_at:
    type: string
    format: date-time
    example: "2025-03-22T18:24:34.479219+01:00"
required:
  - id
  - project_id
  - test_id
  - branch_name
  - test_name
  - test_group
  - test_file
  - test_suite
  - created_at
  - updated_at
```
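A receiver may want to check incoming payloads against this schema before acting on them. The sketch below uses only the standard library. It checks that every required field is present and is a string, and that the two timestamps parse as ISO 8601 date-times. It deliberately skips the other format checks. The function and constant names are hypothetical; a full JSON Schema validator library would be an alternative.

```python
from datetime import datetime

REQUIRED_FIELDS = (
    "id", "project_id", "test_id", "branch_name", "test_name",
    "test_group", "test_file", "test_suite", "created_at", "updated_at",
)
DATETIME_FIELDS = ("created_at", "updated_at")


def validate_notification(payload: dict) -> list[str]:
    """Return a list of problems; an empty list means the payload looks valid."""
    errors = []
    for field in REQUIRED_FIELDS:
        if field not in payload:
            errors.append(f"missing required field: {field}")
        elif not isinstance(payload[field], str):
            errors.append(f"{field} must be a string")
    for field in DATETIME_FIELDS:
        value = payload.get(field)
        if isinstance(value, str):
            try:
                datetime.fromisoformat(value)
            except ValueError:
                errors.append(f"{field} is not an ISO 8601 date-time")
    return errors
```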