Working with Docker

Use Semaphore to build, test, store, and deploy Docker images to production.

This page describes how to build, test, and publish Docker images using Semaphore. If you want to run jobs inside Docker containers, see the Docker environments page.

Overview

Docker comes preinstalled in all Semaphore Machines. You can use the well-known Docker command line tool to build and run containers inside Semaphore.

How to build a Docker image

You can use the docker command line tool inside a Semaphore job. In most cases, you should also run checkout to clone the repository in order to get access to the code and Dockerfile.

The following example builds an image. Because the job doesn't push it to a Docker registry, the image is lost as soon as the job ends. To keep the image, authenticate with a registry and push it.

checkout

docker build -t my-image .

docker images

Using Dockerfiles

The following example shows a Dockerfile that builds an image containing a Go application:

FROM golang:alpine

RUN mkdir /files

COPY hello.go /files

WORKDIR /files

RUN go build -o /files/hello hello.go

ENTRYPOINT ["/files/hello"]

The Dockerfile shown above:

- Downloads the official Go image

- Creates a directory called files

- Copies the hello.go source file to the new directory

- Compiles the source file into a binary

- Runs the binary when the container starts

To build and run the image, add the following commands to your job:

checkout

docker build -t hello-app .

docker run -it hello-app

Due to the introduction of Docker Hub rate limits, Semaphore automatically redirects image pulls from the Docker Hub repository to the Semaphore Container Registry.

Docker layer caching

Docker images are organized as layers, which you can leverage to speed up the build process on large images.

To leverage layer caching, modify your Docker build job as follows:

- Authenticate with the Docker registry of your choice. For example, for Docker Hub:

    echo "$DOCKER_PASSWORD" | docker login --username "$DOCKER_USERNAME" --password-stdin

- Download the base or latest image from the Docker registry:

    docker pull "$DOCKER_USERNAME"/my-image-name:latest

- Add the --cache-from argument to the build command:

    checkout
    docker build \
      --cache-from "$DOCKER_USERNAME"/my-image-name:latest \
      -t "$DOCKER_USERNAME"/my-image-name:$SEMAPHORE_WORKFLOW_ID .

  In this example, we're using SEMAPHORE_WORKFLOW_ID because it provides a unique ID for each build, but you can use any other tagging strategy. See Semaphore Environment Variables for other variables you might use in the build, such as $SEMAPHORE_GIT_BRANCH.

- Push the latest build to the Docker registry:

    docker tag "$DOCKER_USERNAME"/my-image-name:$SEMAPHORE_WORKFLOW_ID "$DOCKER_USERNAME"/my-image-name:latest
    docker push "$DOCKER_USERNAME"/my-image-name:latest

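The build should complete faster because Docker reuses the cached layers and rebuilds only the layer for the first changed instruction and the layers that follow it. On the very first run there is no image to pull yet, so the pull command fails; append || true to it if the job should continue in that case.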
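If you tag images by branch instead of workflow ID, note that Git branch names may contain characters, such as /, that Docker does not accept in a tag. The following is a minimal sketch of one way to handle this, not a Semaphore feature; sanitize_tag is a hypothetical helper name introduced here for illustration.

```shell
# Hypothetical helper: turn a Git branch name into a valid Docker tag.
# Docker tags may contain letters, digits, '_', '.' and '-', must not
# start with '.' or '-', and are limited to 128 characters.
sanitize_tag() {
  printf '%s' "$1" \
    | tr -c 'A-Za-z0-9_.-' '-' \
    | sed -e 's/^[.-]*//' \
    | cut -c1-128
}

# Assumed usage inside a job (commented out because it needs Docker):
# TAG="$(sanitize_tag "$SEMAPHORE_GIT_BRANCH")"
# docker build \
#   --cache-from "$DOCKER_USERNAME"/my-image-name:latest \
#   -t "$DOCKER_USERNAME"/my-image-name:"$TAG" .
```

Every character outside the allowed set is replaced with a hyphen, so two different branch names can map to the same tag; combine the result with SEMAPHORE_WORKFLOW_ID if you need uniqueness.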

How to authenticate to Docker registries

To store the images you build or to access private images, you must first authenticate with a Docker registry such as Docker Hub, AWS Elastic Container Registry, or Google Cloud Container Registry.

Using Docker Hub

The following example shows how to authenticate with Docker Hub so we can push images:

checkout

echo "$DOCKER_PASSWORD" | docker login --username "$DOCKER_USERNAME" --password-stdin

docker build -t hello-app .

docker tag hello-app "$DOCKER_USERNAME"/hello-app

docker push "$DOCKER_USERNAME"/hello-app

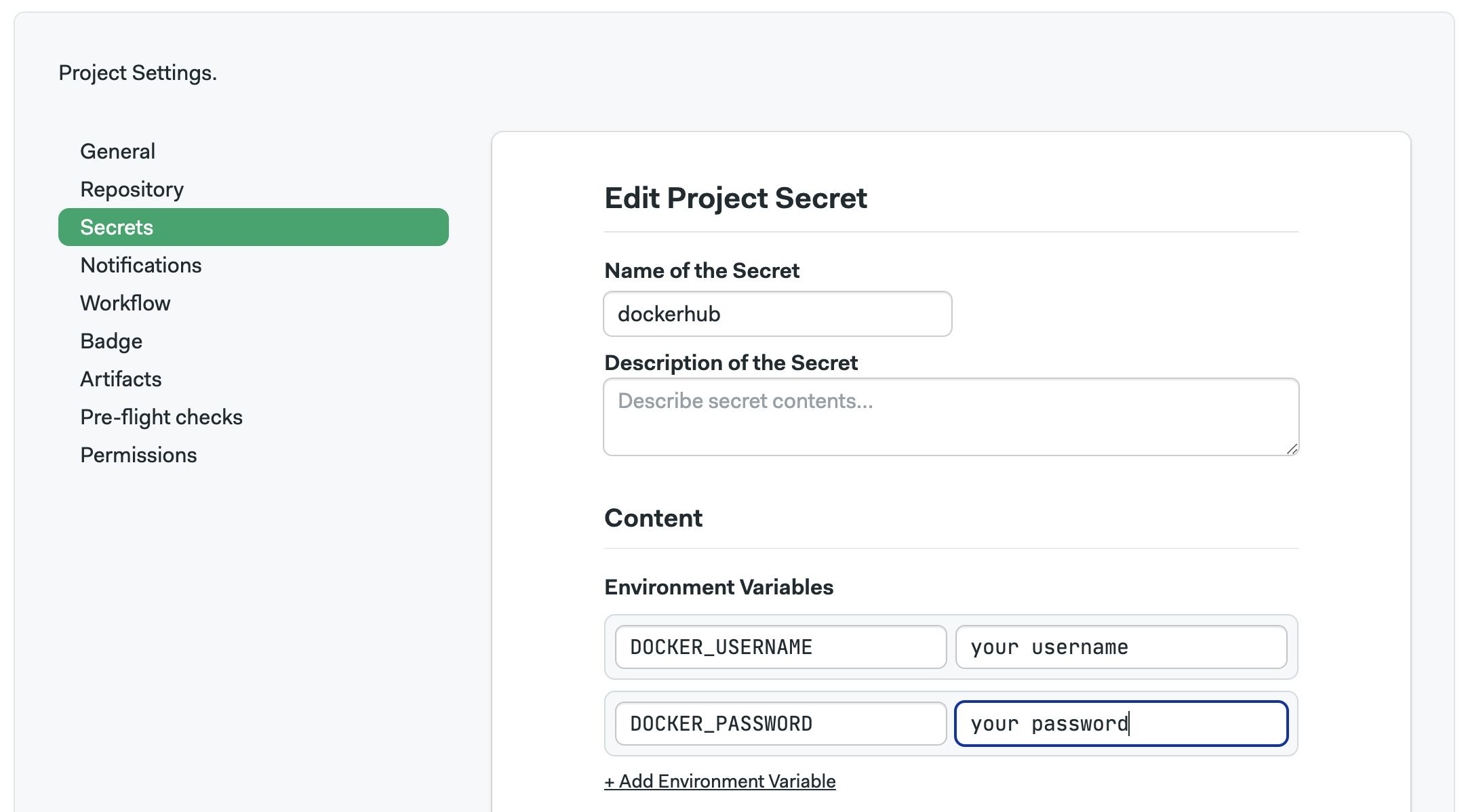

The example above assumes there is a secret containing your Docker Hub credentials, exposed as the environment variables DOCKER_USERNAME and DOCKER_PASSWORD. The password field can contain either your account password or a Docker access token; the command remains the same.
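If the secret is not attached to the job, the login command fails with a message that does not name the missing variable. A minimal sketch of a guard that fails early with a clearer message might look like this; require_env is a hypothetical helper, not part of Semaphore or Docker.

```shell
# Hypothetical helper: fail fast when a required variable is unset or empty.
require_env() {
  for name in "$@"; do
    # Indirect lookup through eval keeps this compatible with POSIX sh.
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      echo "Missing required environment variable: $name" >&2
      return 1
    fi
  done
}

# Assumed usage before logging in (commented out because it needs Docker):
# require_env DOCKER_USERNAME DOCKER_PASSWORD
# echo "$DOCKER_PASSWORD" | docker login --username "$DOCKER_USERNAME" --password-stdin
```

Only pass trusted, literal variable names to the helper, since the names are evaluated by the shell.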

You can verify that you have successfully logged in by running:

$ docker login

Login Succeeded

Using AWS ECR

To access your AWS Elastic Container Registry (ECR) images:

- Create a secret containing the variables AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY

- Enable the secret in your job

- Define the environment variables AWS_DEFAULT_REGION and ECR_REGISTRY

- Type the following commands in your block prologue:

  Prologue

    sudo pip install awscli
    aws ecr get-login --no-include-email | bash

- Type the following commands in your job:

  Job commands

    checkout
    docker build -t example .
    docker tag example "${ECR_REGISTRY}"
    docker push "${ECR_REGISTRY}"
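The get-login subcommand is only available in version 1 of the AWS CLI; version 2 replaces it with get-login-password, whose output is piped to docker login. The sketch below shows how the prologue could look with a newer CLI. It assumes that ECR_REGISTRY holds the full repository URI, as the docker tag command above suggests, and uses a hypothetical helper, registry_host, to extract the registry host for docker login.

```shell
# Hypothetical helper: keep only the host part of an image reference,
# i.e. everything before the first '/'.
registry_host() {
  printf '%s\n' "${1%%/*}"
}

# Assumed usage with AWS CLI v2 (commented out because it needs AWS access):
# aws ecr get-login-password --region "$AWS_DEFAULT_REGION" \
#   | docker login --username AWS --password-stdin "$(registry_host "$ECR_REGISTRY")"
```

If ECR_REGISTRY already contains only the registry host, the helper returns it unchanged.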

Using Google Cloud GCR

To access your Google Cloud Container Registry (GCR) images:

- Create a secret with your Google Cloud access credential file ($HOME/.config/gcloud/application_default_credentials.json)

- Enable the secret in your job

- Define the environment variables GCR_URL, with the URL of your registry (e.g. asia.gcr.io), and GCP_PROJECT_ID

- Type the following commands in your block prologue:

  Prologue

    gcloud auth activate-service-account
    gcloud auth configure-docker -q

- Type the following commands in your job:

  Job commands

    checkout
    docker build -t example .
    docker tag example "${GCR_URL}/${GCP_PROJECT_ID}/example"
    docker push "${GCR_URL}/${GCP_PROJECT_ID}/example"

Using other registries

To pull and push images with other Docker registries such as JFrog or Quay, create a suitable secret and use the following commands. Include the registry host in the image name; otherwise Docker pulls from Docker Hub:

echo "$DOCKER_PASSWORD" | docker login --username "$DOCKER_USERNAME" --password-stdin registry.example.com

docker pull registry.example.com/registry-owner/image-name